What is Parallelism?

-

Posted on November 18, 2010 by Derek Dieter

-

3

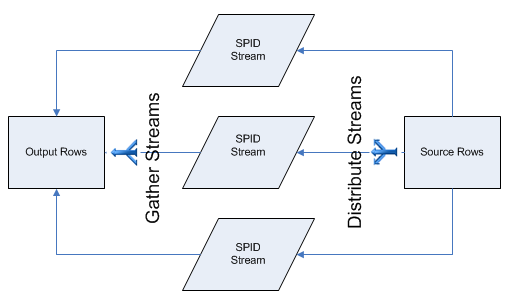

Parallelism is a feature in SQL Server which allows expensive queries to utilize more threads in order to complete quicker. The query optimizer makes the determination of how expensive a query is based upon the Cost Threshold for Parallelism setting set at the SQL Server Instance level. This cost threshold for parallelism is an estimate (roughly defined in seconds) that the query optimizer has determined a statement will take based on an estimated (or cached) execution plan. Generally the queries that qualify for parallelism are high IO queries. In a normal process, a source is read using a single SPID (Server Process ID) and it output using the same SPID. In parallelism, multiple SPIDs are used to read a source (this is known as distributing streams), then an operation may be performed in the streams, then the streams are gathered. Below is an illustration of this:

Although parallelism may seem like a good idea, often times it is not. For OLTP systems that facilitate a lot of user requests parallelism is usually an indication of poorly written queries and/or queries that are in need of indexes. It can also cause issues on servers that have a lot of processors that also have disk IO contention. The reason for this is because parallelism will by default spawn as many SPIDs as their are processors. In other words, if you have a rogue query joining a hundred million records that does not qualify for parallelism, then by itself, only one process will be reading the hundred million records. However if it qualifies for parallelism and the server has 16 processors, then you will now have 16 threads each trying to get a piece of the hundred million records powered by their own processor. I’ve seen this bring many servers to their knees.

One indication that parallelism is occurring on your system is when you run sp_who2 and you see the same SPID listed more than once. This is indicative of a large query being broken into multiple streams only to join back into a single result later. Some streams may finish their operations prior to other streams completions. When this happens it results in a wait type of CX_Packet. When this wait type is common, then you are waiting for parallel streams to finish.

As a summary, you do not want parallelism to be enabled unless you are sure that:

- You have more than enough resources

- You have a well optimized SQL Server

- Your server is mainly used for ETL purposes

- Comments (RSS)

- Trackback

- Permalink

I have browsed tens of websites trying to understand what is ”Parallelism” none of them helped me ,all of them full of Jargon, I like your analogy explaining this topic,

Parallelism behaves performantly if there are as many files as there are cores. This is because SQL Server can thread per core, but a core can only have I/O to one file at any given CPU Cycle. If each core can get I/O to a single file, with a single table spread out across the multiple files in the File Group, then Derek’s observation about parallelism bringing a server to it’s knees will be moot. A server will only be brought to it’s knees from parallelism if the files are not correctly configured, and if all the tables are on one single MDF in the PRIMARY file group.

By the way, thank you for this explanation Bob. I never thought about how many files being the contention in regards to the parallelism issues I’ve experienced in the past. Thank you for this insight. I do have to say however that I’ve seen parallelism consume too many resources even when all the data it’s accessing is in the buffer pool. In other words it’s not even touching the MDF’s. Since each SPID has access to it’s own processor it can consume too much CPU. Not to mention the memory overhead of an additional SPID.

Derek