Troubleshooting SQL Server Slowness

-

Posted on June 20, 2009 by Derek Dieter

-

4

The first step in diagnosing SQL Server performance is to determine the physical bottleneck with the most contention. Contention in one of the following areas does not always mean that subsystem is performing poorly. It could just as well be improper utilization due to poor tuning. Nevertheless, identifying the bottleneck is always the first place to start. Luckily, Microsoft does provide some unsupported but very useful tools to help us find this. For these, see the video Using SQLDiag and SQL Nexus. I still recommend reading the article below, as it will help understand the interrelation between subsystems.

There are ten main bottlenecks that can cause SQL Server to slow down. They are all interrelated and often fixing one causes another bottleneck to be revealed.

- CPU

- Memory

- Disk

- Paging

- Blocking

- Network IO

- Process Trimming

- Old Statistics

- Fragmentation

- Recompilation

Blocking

Blocking is caused by contention of resources. To understand blocking you need to understand locking. Locking occurs in order to ensure users see up-to-date accurate information. If records being updated are also being shown to a user before the update finishes, then inaccurate information is being displayed.

The most common reason for blocking is the lack of indexing or queries not written to utilize existing indexes. They mostly occur when update or delete statements are performing a table scan, while an incompatible lock like a select statement tries to read the same records.

This script will Show the blocking process

This script will show the table involved in the blocking

And this will show the queries that are most blocked

Once you find the blocker, you may need to kill it. To do so use the kill command:

[cc lang=”sql”]

–Replace the number below with the blocking SPID

KILL 5000

[/cc]

You will then want to determine if it is missing an index. If so, adding the appropriate index will likely get rid of the problem.

Old Statistics

If old statistics is your problem, you will likely experience this as a gradual decline of SQL Server slowing down over many days or weeks, or you may have just upgraded your platform (from 2000 to 2008) and forgot to update the statistics. Out of date statistics cause inaccurate execution plans. This problem is difficult to determine, so we should eliminate it first. Bad execution plans are many times a silent killer because the system can run severely slow, yet all other bottlenecks may be performing within an acceptable range.

First and foremost, turn on “auto update statistics” for the database. It is highly recommended to leave this on. Next, update all the statistics in the database. Keep in mind this may take time considering the sample rate for the statistics update. It is still highly recommended prior to troubleshooting.

It is also a good practice to rebuild or reorganize the indexes. Although this can likely be an intensive process to run during production hours.

CPU – (Historically Low)

When the CPU on a box that has historically been low becomes pegged, there is a lot of hope because this is usually the easiest fix. It is likely you have a run away query. The first thing you want to do is run sp_who2 (or sp_who3) and look for connections that have a SPID > 50 with abnormally high CPU time. The CPU time shown on sp_who2 is cumulative for the entire connection, so keep in mind if the connection has been open for days, the high CPU time might be plausible. Once you find a suspect connection, run the following command to see what is executing (sp_who3 already provides this):

[cc lang=”sql”]

DECLARE @SPID int

SET @SPID = yourspidhere

DBCC INPUTBUFFER(@SPID)

[/cc]

The input buffer will show you the command that is being executed. If it is determined to be rougue, kill it:

[cc lang=”sql”]

DECLARE @SPID int

SET @SPID = yourspidhere

kill @SPID

[/cc]

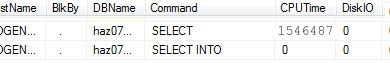

Another slowdown that may occur is Random SQL Server Execution plan hanging. To determine this, when looking at the CPU utilization for each session, take note of the amount of Disk IO being used. If you notice high processor utilization and very low IO utilization, then it is likely due to a spinning execution plan.

High CPU, little or no IO

This is a problem that has cropped up since SQL Server 2005. It is a small price to be paid for a smarter optimizer. The easiest way I have found to fix this is to simply rewrite the query. You can find more information here (Random SQL Server Execution plan hanging).

CPU – (Historically Medium/High)

For a CPU that has historically been high, the most common issue is a lack of indexes. This is an easy fix with the new DMVs introduced in SQL Server 2005. Usually adding indexes for the top 5 offending queries will resolve this issue. Follow this to Find Missing Indexes. Lack of indexes causes table scans which in turn eats up memory IO, disk IO and processor.

Memory

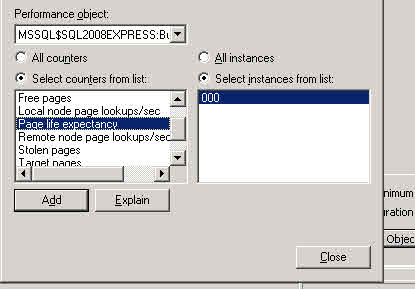

Likely the most important component of SQL Server is enough memory. With enough RAM, you can mitigate the number of times your disk needs to be touched. Ideally, the data that is queried often should always be in RAM. In order to accomplish this, enough RAM should be provided to ensure the Page Life Expectancy (PLE) remains over 300.

To verify the PLE:

- Open perfmon

- Select the Performance Object of MSSQLServer:Buffer Manager

- Highlight “Page Life Expectancy” and click Add.

The counter should remain over 300 seconds. This is the average amount of time SQL Server has estimated that each data page will be able to stay resident in memory until another process forces it out. If you see the PLE between zero and 100, then you definitely have a bottleneck and you need to Find the memory usage of the currently executing queries. If the query is run on a schedule and you weren’t able to catch it then you can Find the queries that have historically taken the most memory.

Additional information that may help is identifying the database taking the most memory

Disk

The biggest scapegoat for slowness is always the disk. (Yeah I said it). Yes, it is true that more often than not disks do not perform according to the ideal specifications. But aside from checkpoints and large data loads, it shouldn’t matter that much. Most customer facing OLTP systems I have worked with have been mostly read intensive. In almost all instances where the disk counters were indicative of under performing, it was a lack of both memory and indexing that contributed disk high disk queue length, thus hosing the disk. I would like to initially steer you clear of looking at the disk as being the problem.

Now, with that being said, here are the conditions where you will see disk bottlenecking:

- In almost all cases, the CPU % is very low because it is waiting for the disk.

- The server is write intensive, usually a data warehouse or a subscriber to a large replication.

- Import processes are written using full truncates and full inserts rather than delta inserts.

- Delete is used where truncate could be an option

Look here to help determine expensive IO read queries and determine expensive IO write queries

The only plausible instance where I needed management to change the disk was when IT setup a RAID 6 for my data drive.

So, if your CPU is low (less than 30%), and you verified the Page Life Expectancy (> 300) and cleaned the indexes, go ahead and blame the disk. But first, verify in perfmon under Performance Object -> Physical Disk:

- % Idle Time < 30%

- Avg Disk/Sec Transfer > .100

- Disk Queue Length < 20

Those are the only ones I typically use because most of the times Windows is assuming it is looking at a local disk, when most corporate SQL Servers run off a SAN. Also, to get accurate results, the interval for perfmon should be under 5 seconds.

Paging

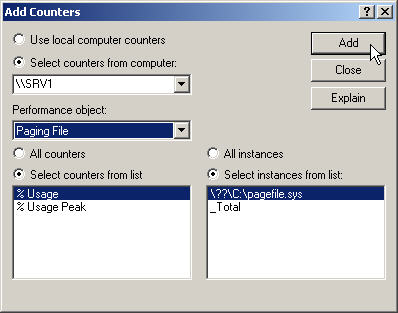

This is an issue that has cropped up with the advent of 64bit OS’s. Windows Server 2003 changed it’s priority for non OS processes. This severely impacted SQL Server and created a heck of a time for SQL Server support. What happens, is when the OS feels it is experiencing external memory pressure, it begins choosing victims to steal their memory. In which cases non system level process will find their working set swapped to the paging file. The most obvious indication of this is the amount of page file usage. It should preferably be under 8%. I have seen some SQL Servers run ok on 10% however this is pushing it.

To determine the page file usage open perfmon and select the Paging File performance object. Select both % Usage Peak and %Usage. Usage Peak is the peak amount that has how much has been used since the server last rebooted.

Paging File

To fix a paging file gone bad, unfortunately either requires a reboot, or sometimes you can get away with restarting SQL Server. The most important issue is why did it happen in the first place. Ideally, you want to provide the OS with enough room to breathe. And with 64-bit OS’s, this usually means a lot more memory than you are use to providing that for 32-bit. I only feel comfortable if my dedicated SQL Server box has a full 8 GB Free. Yes, that’s correct, 8 GIGA-BYTES. I know it sounds like a lot, however that is a valid threshold we determined with a SQL Server 2005 box that had trimming issues. (years from now people will be laughing at this post)

As for setup, it is recommended that (even on 64-bit OS’s) that you turn on AWE. I went around and around with this until I ended up speaking with someone from the highest level support at SQL Server Support. This person had access to the developers who looked up source code in helping with our issue. I explained to him that it doesn’t seem like we need AWE turned on because 64-bit can map the memory itself. He replied, I know, but just turn it on. And that was enough for me.

Some other things to keep in mind, are large data transfers. DO NOT use your SQL Server box to copy or move files across the network. Instead have another box come and get the file. Do not waste your precious memory on file operations. Here is a Microsoft KB: How to reduce paging of buffer pool memory in the 64-bit version of SQL Server

Process Trimming

This is very much related to paging and some of the same symptoms can be seen. What we were referring to regarding paging in the section above was Buffer Pool paging. Process trimming refers to working set paging. The difference however is subtle. It requires an explanation between the difference of “Private Bytes” and “Working Set”. The working set is the amount of memory the OS has granted to a process for usage. According to the process, it has the entire working set as it’s playground. However, the actual amount of physical memory it has is the private bytes which is a lower amount. The difference between the working set and the private bytes can be found in the glorious paging file. So if you notice a large disparity between private bytes and the working set, you are experiencing something known as process trimming. I hope you never have this issue, and you should not have this issue if you give the OS enough room to breathe. There are plenty of articles that delve deep into this subject so I will leave the experts to explain the specifics. The point is, give the OS enough room to breathe. Here is a performance team article on the subject: SQL and the Working Set

Additional Resources

For an excellent resource that details the internal architecture of SQL Server along with bottleneck analysis, I recommend reading Professional SQL Server 2008 Internals and Troubleshooting.

- Comments (RSS)

- Trackback

- Permalink

🙂 the horror 8 GIGAbytes. heh made me chuckle,

Jon, we’ve struggled with what to do about mesrebmhip, and still are. What would/could we offer that would justify a paid mesrebmhip?I’ve long hoped we could exert some influence. I still have visions of once a quarter PASS urging our members to vote up 10 items on Connect. When a 1000 votes is a big deal right now, imagine if we drove 10k votes on some of those annoying but still important flaws we all live with!

Derek, in the ‘DISK’ section, links

‘determine expensive IO read queries’ and ‘determine expensive IO write queries’ refer to the same page. Looks like the IO Write link is not pointing to the correct page.

Hi Derek,

Great stuff! Found this article very useful, thanks very much for posting. I will be spending quite a lot more time on your site!

Thanks,

Paul

Great Article. I’ll definitely be referencing this in future so don’t you dare to change the link!

Hi Derek,

It would be helpful if you tell me how to change the PLE value?

Thanks in advance.

HI Xenthil,

Unfortunately the PLE is not a value that can be changed. The only way to get the PLE higher is to find the memory condition that is causing you to run out of memory. In most cases, it is missing indexes. When you are missing indexes, then table scans read in every row and cause memory issues. Try the following DMV to find the missing indexes:

http://sqlserverplanet.com/dmv-queries/missing-indexes-dmv/

Usually if you get the top 5 – 10, you will see a dramatic increase.

Good Luck!

Derek

There should not be any prloebms on Windows 7, 32- bit (just make sure to choose the 32-bit installation). As for VS 08, it installs SQL Server Express (I think 2005), so that is a minor issue. It means that when you go to install 2008 R2, you either have to use a different named instance, like SQLEXPRESSR2. Or you have to uninstall SQL Server after VS 08 installs and before you try to install SQL Server 2008 R2.Then you can install R2 with the SQLEXPRESS named instance.